Different Channel, Different Video, Similar Patterns

Can This Go Live? #3

2025 July 5

Disclaimer: The following is an educational analysis based on the author's interpretation of publicly available data using the framework outlined in this post. It constitutes the author's opinion and is not a definitive assertion of fact.

What is this blogpost about?

This is another addition to the blogpost series "Can This Go Live?". The first blogpost outlining the series' focus and the intended audience is here.

What did I stumble upon?

About the video

- Platform: YouTube

- Title: Conan & Matt Gourley Have Beef With Beets | Conan O'Brien Needs A Fan

- Creator: Team Coco

- Publication date in UTC timezone: 2025-07-03

- Interaction date in UTC timezone: 2025-07-03

About my interest in the video

One of my friends is incredibly funny. In 2010, in the midst of his jibber jabber of then-contrarian takes such as "Modern Family is a funnier show than Friends" and "Dwyane Wade and LeBron James won't win a championship, two alpha dogs can't coexist as one"1, my friend began posting frequently about Conan O'Brien. His Facebook wall was filled with "I'm with Coco" pictures and quotes. Because I found my friend incredibly funny and he was showing immense support for Conan O'Brien's comedic works, I started consuming Conan's bits. Since then, I've been a big fan of Conan's work, and I listen to his latest bits on his YouTube channel and other channels that host him2.

About how I stumbled upon the examples

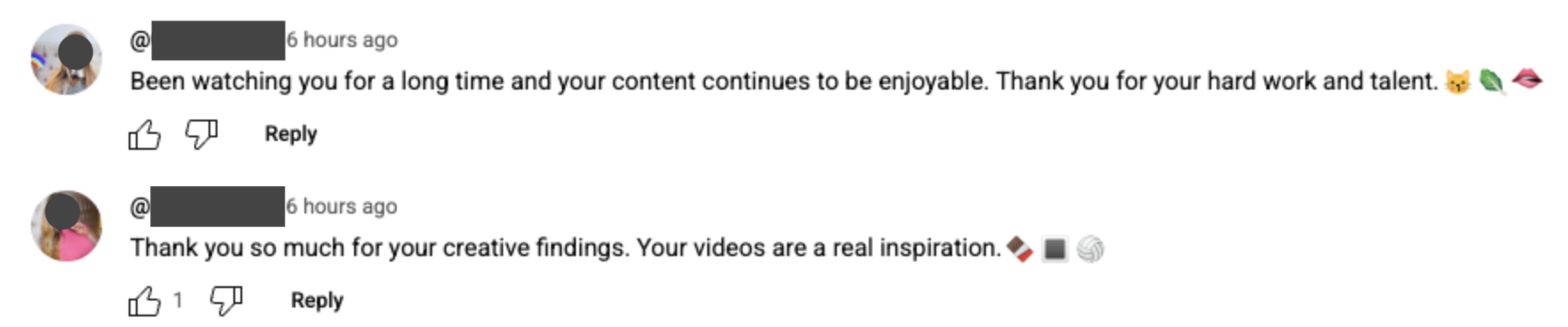

After publishing the first two blogposts in this series, I continued my routine of scrolling through the comments section with comments sorted by the “Newest first” option. While watching the latest video by Team Coco, I noticed patterns similar to those previously described. At the bottom of the section, I came across 2 accounts. For the remainder of this blogpost, these 2 accounts will be referred to as “G1 accounts”. Their comments resembled the comments posted by the accounts described in the "Can This Go Live? #2" blogpost. The comments (i) expressed gratitude for the creator's work, (ii) shared a content structure of 2 sentences followed by 3 emojis, and (iii) ended in gibberish emoji sequences (e.g. 💖🍁✨ and 🍫🌹📸). See Figure 1.

Figure 1: Comments with some recognizable patterns.
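The comment structure described above (2 sentences followed by 3 emojis) can be expressed as a rough check. The following is a minimal sketch and not how any platform actually detects spam. The function names are hypothetical, the sentence counting is naive, and the emoji test is an approximation that treats any character in the Unicode category "So" (Symbol, other) as an emoji.

```python
import re
import unicodedata


def is_emoji_like(ch):
    # Assumption: Unicode category "So" is a rough stand-in for "is an emoji".
    # It misses some emojis and includes some non-emoji symbols.
    return unicodedata.category(ch) == "So"


def split_trailing_emojis(comment):
    """Split a comment into (text, trailing run of emoji-like characters)."""
    text = comment.rstrip()
    end = len(text)
    while end > 0 and is_emoji_like(text[end - 1]):
        end -= 1
    return text[:end].rstrip(), text[end:]


def count_sentences(text):
    # Naive sentence count: non-empty chunks between ., ! and ? marks.
    return len([s for s in re.split(r"[.!?]+", text) if s.strip()])


def matches_pattern(comment, sentences=2, emojis=3):
    """True if the comment is `sentences` sentences followed by `emojis` emojis."""
    text, tail = split_trailing_emojis(comment)
    return count_sentences(text) == sentences and len(tail) == emojis


# Hypothetical comment text; only the emoji sequence comes from the examples above.
print(matches_pattern("Thank you for this episode. It made my whole week! 💖🍁✨"))
print(matches_pattern("Great episode!"))
```

A match on this structure alone says little, since plenty of genuine comments look like this. It is only meaningful alongside the account-level similarities discussed next.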

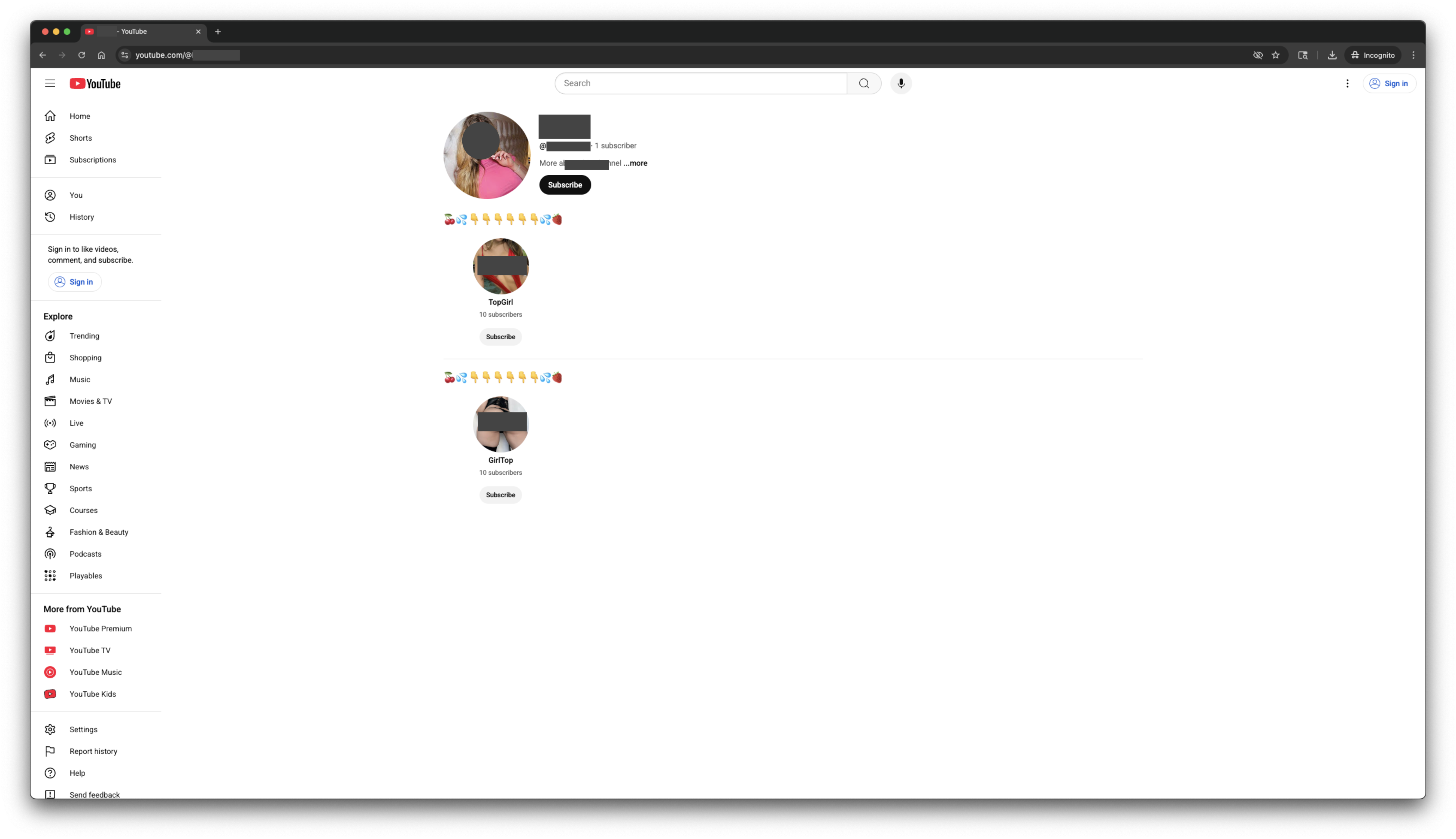

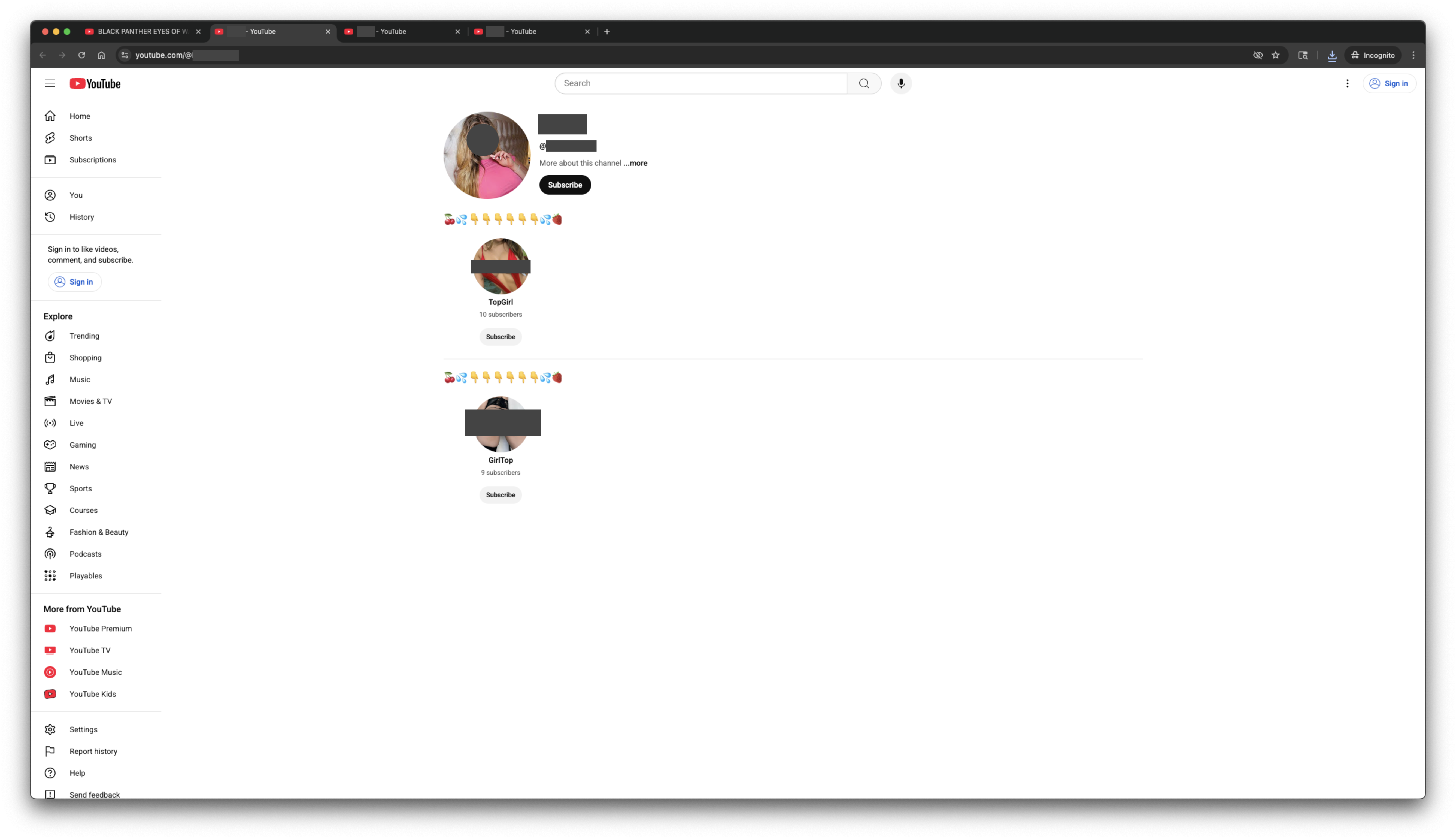

Comparing the activities/behaviors in this Team Coco video with those in the previous Emergency Awesome video further, one account in this video and another account in the previous video have the same account profile picture3. See Figures 2 and 3 and Table 1.

Figure 2: The account profile of a comment poster on the current video by Team Coco.

Figure 3: The account profile of a comment poster on the previous video by Emergency Awesome.

| | Team Coco video | Emergency Awesome video |
|---|---|---|
| Account figure | Figure 2 | Figure 3 |
| Account profile picture4 | P | P |
| Account name5 | A | B |
| Account username6 | A-a1b2c | A-d3e4f |
| Account description | Links to 2 accounts: X1, X2. | Links to 2 accounts: X1, X2. |
| Creation date | 2025 July 3 | 2025 July 3 |

Based on Table 1, the accounts may be perceived as "near-duplicate" accounts because of:

- Same picture

- Similar usernames

- Same links

- Same creation date
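The four signals above can be written down as a pairwise comparison. This is a minimal sketch using the anonymized placeholder values from Table 1. The `Account` fields, the `picture_id` label (standing in for however two pictures are judged identical), and the rule that usernames are "similar" when they share the part before the last hyphen are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Account:
    picture_id: str   # hypothetical label for the profile picture
    name: str
    username: str
    links: frozenset  # accounts linked in the description
    created: date


def username_stem(username):
    # Assumption: usernames look like "<stem>-<suffix>", as in Table 1.
    stem, sep, _suffix = username.rpartition("-")
    return stem if sep else username


def shared_signals(a, b):
    """List which of the four near-duplicate signals two accounts share."""
    signals = []
    if a.picture_id == b.picture_id:
        signals.append("same picture")
    if a.username != b.username and username_stem(a.username) == username_stem(b.username):
        signals.append("similar usernames")
    if a.links and a.links == b.links:
        signals.append("same links")
    if a.created == b.created:
        signals.append("same creation date")
    return signals


# Placeholder values copied from Table 1.
team_coco = Account("P", "A", "A-a1b2c", frozenset({"X1", "X2"}), date(2025, 7, 3))
emergency_awesome = Account("P", "B", "A-d3e4f", frozenset({"X1", "X2"}), date(2025, 7, 3))
print(shared_signals(team_coco, emergency_awesome))
```

For the two accounts in Table 1, all four signals are reported. Any single signal is weak on its own (many accounts are created on the same day), so the overlap of several signals is what makes the pair stand out.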

Summary of the seemingly problematic activities/behaviors

Based on the exploration of publicly available information, there is reason to believe that a group of coordinated, inauthentic, near-duplicate accounts is acting in such a way as to (1) direct users to a collection of on-platform accounts and then (2) direct users once more to off-platform products/services (e.g. URLs hosted on "beacons.ai"7). A hypothesis for the group's incentive is that it aims to increase traffic to malicious digital products/services while minimizing the chance of detection by using (1) multi-layered redirection and (2) a group of newly created, near-duplicate accounts with similar comment posting behaviors. For a more comprehensive outline of what the group of coordinated, inauthentic, near-duplicate accounts does, refer to the process outline in the summary section of the "Can This Go Live? #2" blogpost.

What are some thoughts after the walkthrough above?

Since the previous blogpost, I looked through YouTube's Creator Insider channel for videos about spam, because I believe that folks at YouTube are likely aware of and working to resolve these problematic patterns. This video from July 2021 shares some information on mitigation efforts focusing on comment spam. Based on my understanding of the video, comment spam is a longstanding and evolving issue, and mitigation efforts will bring the spam asymptotically closer to 0. Also, this video from April 2023 shares some information on the community guidelines policy development and (program-driven and person-driven) enforcement process. Based on my understanding of the video, spam seen in production could be due to (1) an active detection workflow that needs more time to take effect, (2) an active detection workflow that made a debatable judgment call on a nuanced edge case, or (3) a detection workflow that needs enhancement or needs to be created.

After watching the videos, these are the questions I have in mind:

With regard to the scale of this issue

- How many coordinated, inauthentic, near-duplicate networks are being operated?

- How many accounts are in the networks?

- How many subscribers does the network have, and how many of those subscribers are not operated by the network?

With regard to the impact of this issue

- What is the network's targeting approach? Is it based on channel-level factors (e.g. creator background), video-level factors (e.g. video content category) or audience-level factors (e.g. language)?

With regard to the mitigation of this issue

- What channel-level signals are being used to prevent and reduce inauthentic accounts?

- What is the effectiveness and what are the challenges of using profile pictures as a signal?

- Can the detection workflows be run more frequently? What are the tradeoffs, and are the tradeoffs justifiable?

- Can the detection workflows handle nuanced edge cases better? What are the tradeoffs to change the relevant policies and/or the processes and/or the programs, and are the tradeoffs justifiable?

- Are new detection workflows needed?

- Can content creators better use existing tools such as the "Blocked words" functionality of the comment settings8?

- Can content consumers better use existing tools such as "Report" on a comment and/or video and/or channel level?

- Are new tools needed, such as alerts that a profile picture is frequently used or that an account username is highly similar to other account usernames?
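To make the last question more concrete, here is a minimal sketch of what a "frequently used profile picture" alert might compute. It is purely illustrative: the function name, the threshold, and the idea that each picture can be reduced to a comparable identifier are assumptions, and the third account is invented for contrast.

```python
from collections import defaultdict


def picture_reuse_alerts(accounts, threshold=2):
    """Map each picture identifier used by at least `threshold` accounts to those usernames."""
    groups = defaultdict(list)
    for username, picture_id in accounts:
        groups[picture_id].append(username)
    return {
        picture_id: sorted(usernames)
        for picture_id, usernames in groups.items()
        if len(usernames) >= threshold
    }


# (username, picture identifier) pairs; the first two use the Table 1 placeholders,
# the third is a made-up account with a different picture.
observed = [("A-a1b2c", "P"), ("A-d3e4f", "P"), ("C-x9y8z", "Q")]
print(picture_reuse_alerts(observed))
```

The same grouping could be keyed on a shared username prefix instead of a picture identifier. In either case, the open questions above still apply: legitimate accounts can share stock pictures or common names, so the threshold and the follow-up action would carry the tradeoffs.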