Can This Go Live? #1

2025 July 3

Disclaimer: The following is an educational analysis based on the author's interpretation of publicly available data using the framework outlined in this post. It constitutes the author's opinion and is not a definitive assertion of fact.

What is "Can This Go Live?"?

A series of blog posts that show examples of online activities that I:

- Stumbled upon by interacting with the online community.

- Was surprised to see due to at least one potential community guideline violation.

What is this blog post about?

This is the first blog post in the "Can This Go Live?" series. To start the series off, the examples will likely skew toward those seen on the major user-generated video content (UGVC) platforms, such as the one discussed in this post.

Who might find this blog post interesting?

- For analysts focusing on platform trust & safety and/or platform integrity workflows.

- For video creators seeking more information on spam behaviors, patterns and trends.

- For users seeking more information on how to identify spam and minimize their interactions with it.

- For folks interested in learning more about spam behaviors, patterns and trends.

What did I stumble upon?

About the video

- Platform: YouTube

- Title: The Lakers Just Signed A Silent Killer

- Creator: GOAT

- Publication date in UTC timezone: 2025-07-01

- Interaction date in UTC timezone: 2025-07-02

About my interest in the video

The 2025 summer NBA free agency period started on 2025-06-30 at 18:30 US ET. As part of the media's coverage, some YouTube channels, such as GOAT, started releasing video reviews of player movements. Because I had subscribed to the channel at some point, I saw that it had uploaded this video, which caught my interest.

About how I stumbled upon the examples

When watching videos on UGVC platforms, I like reading the comments, partly because it makes viewing feel more like a community experience. While routinely scrolling through the comments, I came across the following comment.

Figure 1

The comment was surprising because:

1. The commenter's profile picture showed a person dressed provocatively.

2. The comment's like count seemed unusually high compared with the other comments in the video's comment section.
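Point 2 was a judgment made by eye. As a minimal sketch of how an analyst might make "unusually high" more concrete, the snippet below scores each comment's like count against the other comments on the same video using a median/MAD-based modified z-score. When most comments share the same count (for example, zero likes), the MAD is zero, so the sketch falls back to one commonly used alternative, a scaled mean absolute deviation. The function names, the 3.5 cutoff, and the sample counts are assumptions for illustration, not data collected from the video.

```python
from statistics import median

# Hypothetical helpers for illustration; not a platform API.


def modified_z_scores(counts: list[int]) -> list[float]:
    """Robust z-score for each like count, based on the median and MAD."""
    med = median(counts)
    mad = median(abs(x - med) for x in counts)
    if mad > 0:
        return [0.6745 * (x - med) / mad for x in counts]
    # MAD is 0 when most comments share the same count (e.g., 0 likes);
    # fall back to the mean absolute deviation around the median.
    mean_ad = sum(abs(x - med) for x in counts) / len(counts)
    if mean_ad == 0:
        return [0.0] * len(counts)  # every comment has the same count
    return [(x - med) / (1.253314 * mean_ad) for x in counts]


def flag_high_like_comments(likes: dict[str, int], cutoff: float = 3.5) -> list[str]:
    """Return IDs of comments whose like count is an unusually high outlier."""
    ids = list(likes)
    scores = modified_z_scores([likes[i] for i in ids])
    return [cid for cid, z in zip(ids, scores) if z > cutoff]


# Hypothetical like counts for six comments under one video.
sample = {"c1": 3, "c2": 5, "c3": 2, "c4": 4, "c5": 6, "c6": 1200}
print(flag_high_like_comments(sample))  # ['c6']
```

A robust score is used here instead of a plain mean/standard-deviation z-score because a single heavily boosted comment inflates the standard deviation and can mask itself, especially when a video has only a handful of comments.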

Since points 1 and 2 piqued my curiosity, I clicked through to the commenter's profile.

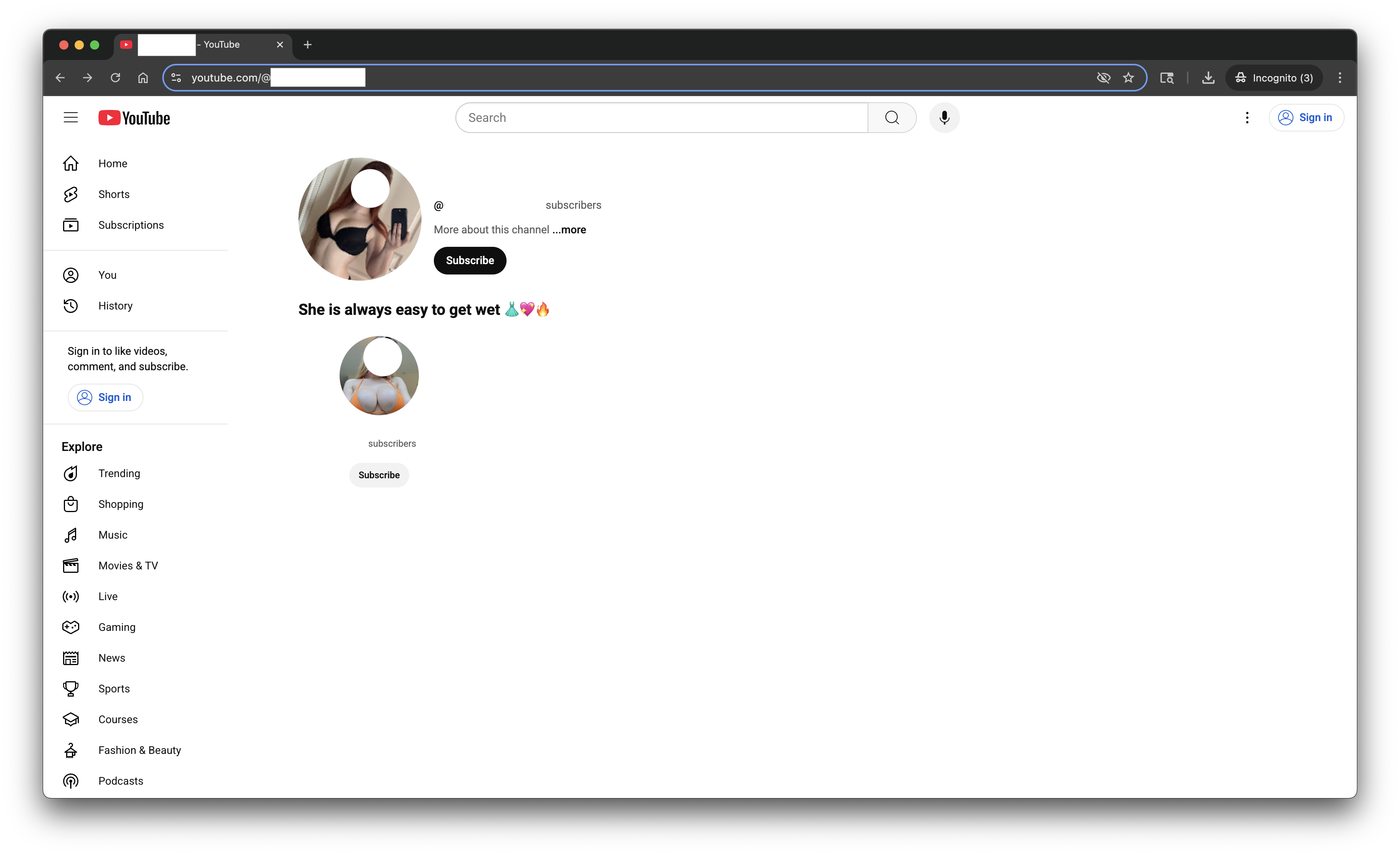

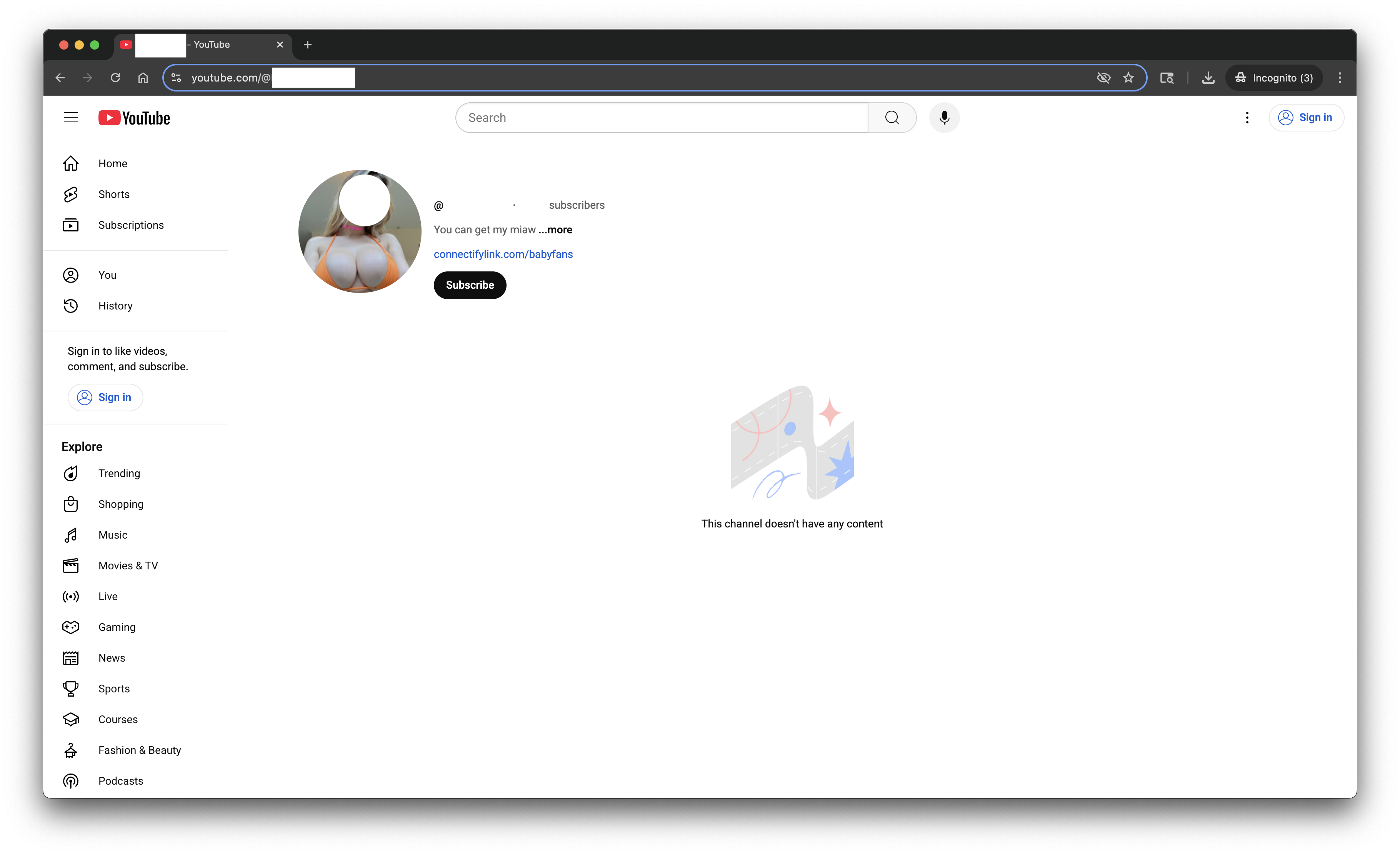

Figure 2

The account profile page is surprising because:

3. It contains sexually themed text.

4. It links to another account's profile page, whose profile picture shows a person dressed provocatively.

Points 3 and 4 piqued my curiosity further, so I first ran a Google Search on the text.

Figure 3

Second, I ran a Google Search on the profile pictures from points 1 and 4. The profile picture from point 1 returned no matches, while the profile picture from point 4 returned exact matches.

Figure 4

Third, I opened the account profile page mentioned in point 4. The page contains an off-platform (outbound) link, "connectifylink.com/babyfans". According to the responses from both a Gemini model and a ChatGPT model, the link is malicious.

Figure 5

Summary of the seemingly problematic activity/behavior

The information above indicates that a group of accounts engaged with video content by adding inauthentic interactions to comments in video discussion sections. This was possibly intended to make a comment more eye-catching to human readers and/or to rank it higher in the platform's comment-ranking algorithm. These "highlighted comments" then draw users to the commenting account's profile page; let's call this account A1. On A1's profile page, a user encounters eye-catching text and eye-catching link(s) to other on-platform accounts; let's call this account (or these accounts) A2. The links are presumably meant to entice the user to click through, and on A2's profile page the user encounters a link to an off-platform digital product/service. For this flow to be worth the effort a group of accounts puts into it, there is a considerable chance that the product/service is malicious.
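The A1 to A2 to off-platform flow described above is essentially a short path through a link graph. As a hedged sketch, assuming an analyst has already captured, for each profile page, the on-platform accounts it links to and the off-platform URLs it shows, a breadth-first walk from the commenting account lists every off-platform URL reachable within a couple of hops, along with how many hops it took. The `Profile` structure, the `trace_funnel` function, the handles, and the URL below are all hypothetical placeholders.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Profile:
    """Hypothetical snapshot of what one profile page exposes."""
    handle: str
    on_platform_links: list[str] = field(default_factory=list)  # other account handles
    outbound_links: list[str] = field(default_factory=list)     # off-platform URLs


def trace_funnel(start: str, profiles: dict[str, Profile], max_hops: int = 2) -> dict[str, int]:
    """Breadth-first walk from the commenting account (A1) through linked accounts.

    Maps each off-platform URL reached to the fewest hops needed to reach it
    (0 = on A1's own page, 1 = on an A2 page, and so on).
    """
    found: dict[str, int] = {}
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        handle, hops = queue.popleft()
        profile = profiles.get(handle)
        if profile is None:
            continue  # page not captured (deleted, private, or not crawled)
        for url in profile.outbound_links:
            found.setdefault(url, hops)  # BFS order means the first hop count is the smallest
        if hops == max_hops:
            continue
        for nxt in profile.on_platform_links:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    return found


# Hypothetical two-account funnel mirroring the A1 -> A2 pattern.
profiles = {
    "a1": Profile("a1", on_platform_links=["a2"]),
    "a2": Profile("a2", outbound_links=["https://offsite.example/landing"]),
}
print(trace_funnel("a1", profiles))  # {'https://offsite.example/landing': 1}
```

Recording the hop count helps separate a direct outbound link on A1's own page from one reached only through an intermediary account such as A2, which is the pattern described in this walkthrough.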

What are some thoughts after the walkthrough above?

I have work experience in the trust & safety landscape, and these examples look like they may violate two sections of the YouTube community guidelines: the "Nudity & Sexual Content Policy" and the "Thumbnails policy". As a result, the walkthrough may raise the following questions for analysts working on platform trust & safety and/or platform integrity workflows:

- a. Do these examples violate at least one of the two guidelines? The published guidelines prohibit activities and behaviors on the platform that are very clearly problematic. "Very clearly" is a critical part of this, because the platform must balance maintaining an awesome community experience with growing the community. As such, the examples I stumbled upon are best reviewed by someone with community guidelines subject matter expertise, who can determine whether they comply with or violate the guidelines.

- b. If "yes" to (a), what is the impact of the issue on ecosystem participants? Participants required to operate the ecosystem may be considered the highest priority, so content consumers, content producers, advertisers, and video platform providers would be assessed first. Other participants, such as regulators, software/hardware service providers, and creative process service providers, can be considered afterwards. Because a malicious link is involved in this experience and the examples look like they could be part of a romance-type scam, financial harm may be the most likely type of harm.

- c. If "yes" to (a), how prevalent is this issue? The number of occurrences and how frequently they happen are important both for discovering the issue and, ultimately, for prioritizing its resolution.

- d. How are potentially problematic keywords/phrases detected? "Get wet" is a known sexually themed phrase referring to sexual arousal. However, it is possible that the phrase's text complies with the Nudity & Sexual Content Policy. Again, a community guidelines subject matter expert is best suited to judge whether it complies with or violates the guidelines.

- e. How are potentially problematic texts then used? They could be useful for finding other accounts that use the same n-gram. However, an n-gram-driven detection approach, if one exists, would seemingly require the n-gram to be classified as problematic first. Therefore, question (d) becomes even more relevant.

- f. Which problematic keywords/phrases are currently being used by "bad actors" without being detected by program-driven approaches? Following questions (d) and (e), if there are "potentially problematic" cases, then there are also "certainly problematic" and "certainly ok" cases. Therefore, some "certainly problematic" cases are probably being used by bad actors and probably going undetected.

- g. How are commonly referenced accounts detected? For example, I observed at least 3 unique account profile pages referring to the account in point 4.
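Questions (e) and (g) both describe ways to expand from one observed example to related accounts. As a rough sketch under assumed inputs (each profile's text, and the on-platform accounts each profile links to; every handle and text below is hypothetical), the snippet groups accounts that share a word n-gram already on a watchlist, and counts how many unique profiles link to each account, i.e. its in-degree in the link graph. The watchlist is assumed to come from the kind of expert judgment described in question (d); the code only matches, it does not decide what is problematic.

```python
import re
from collections import Counter, defaultdict


def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lowercased word n-grams of a profile's text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def accounts_sharing_ngrams(
    texts: dict[str, str], watchlist: set[tuple[str, ...]]
) -> dict[tuple[str, ...], set[str]]:
    """Map each watchlisted n-gram to the accounts whose text contains it.

    Only n-grams already classified as problematic (on the watchlist) are matched.
    """
    sizes = {len(gram) for gram in watchlist}
    hits: dict[tuple[str, ...], set[str]] = defaultdict(set)
    for handle, text in texts.items():
        for n in sizes:
            for gram in word_ngrams(text, n) & watchlist:
                hits[gram].add(handle)
    return dict(hits)


def referral_counts(links: dict[str, list[str]]) -> Counter:
    """Count how many unique profiles link to each account (its in-degree)."""
    counts: Counter = Counter()
    for source, targets in links.items():
        for target in set(targets):  # a page linking twice still counts once
            if target != source:     # ignore self-links
                counts[target] += 1
    return counts


# Hypothetical profile texts and profile-to-profile links.
texts = {"u1": "Get wet, see link", "u2": "get wet", "u3": "Lakers fan"}
watchlist = {("get", "wet")}
links = {"u1": ["x"], "u2": ["x", "x"], "u3": ["x"], "x": ["x"]}

hits = accounts_sharing_ngrams(texts, watchlist)
print(sorted(hits[("get", "wet")]))           # ['u1', 'u2']
print(referral_counts(links).most_common(1))  # [('x', 3)]
```

Counting unique referring profiles, rather than raw link occurrences, keeps one page that repeats the same link from inflating an account's apparent popularity.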