Can This Go Live? #2

2025 July 4

Disclaimer: The following is an educational analysis based on the author's interpretation of publicly available data using the framework outlined in this post. It constitutes the author's opinion and is not a definitive assertion of fact.

What is this blogpost about?

This is another addition to the blogpost series "Can This Go Live?". The first blogpost, which outlines the series, is here.

Who might this blogpost and series be interesting to?

- For analysts focusing on platform trust & safety and/or platform integrity workflows.

- For video creators seeking more information on spam behaviors, patterns and trends.

- For users seeking more information on how to identify spam and minimize their interactions with it.

- For folks interested in learning more about spam behaviors, patterns and trends.

What did I stumble upon?

About the video

- Platform: YouTube

- Title: BLACK PANTHER EYES OF WAKANDA TRAILER 2025: Welcome Back Iron Fist

- Creator: Emergency Awesome

- Publication date in UTC timezone: 2025-07-03

- Interaction date in UTC timezone: 2025-07-03

About my interest in the video

The fictional world of the Marvel universe has captivated me since childhood. I would watch the cartoons whenever they aired on TV, borrow whatever VHS tapes I could find on a shelf, and read the comics I could buy thanks to my parents' generosity. Once the Marvel Cinematic Universe launched, its films contained many "easter eggs" that delighted fans familiar with the Marvel universe's many other canonical works. To make sure that I noticed and understood all of those "easter eggs", I started watching Emergency Awesome. I first watched the channel's videos ~10 years ago, eventually hit its subscribe button, and have clicked¹ into almost every video since.

About how I stumbled upon the examples

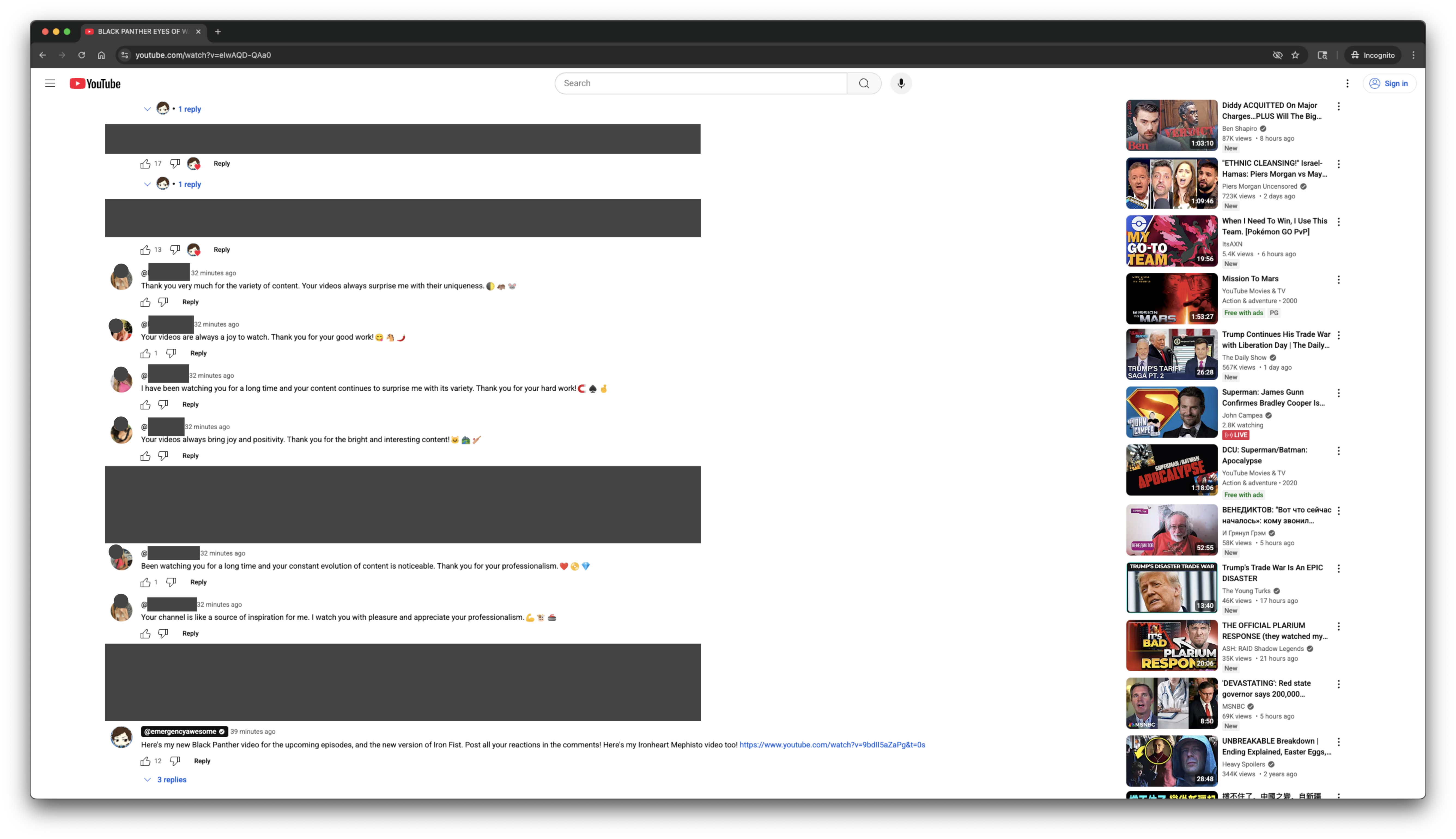

After writing "Can This Go Live? #1", I wondered while watching the video, "Could there be more unexpected behaviors in the comments section?" Following this thought, I did my routine scrolling through the comments, sorted by the "Newest first" option. At the bottom of the section, I came across a group of 6 accounts. See Figure 1.

Figure 1

For the remainder of this blogpost, the group of 6 accounts will be referred to as G1. G1 piqued my interest for further exploration because of the following behavior-dimension properties:

- 6 of 6 accounts posted a comment within a 1- to 2-minute window. On its own, this could reflect authentic behaviors and interactions.

- However, 6 of 6 accounts posted a comment that (i) expressed gratitude for the creator's work, (ii) shared a similar content structure of 2 sentences followed by 3 emojis, and (iii) included gibberish emoji sequences (e.g. 🌓🦛🐭 and 😋🐴🌶).
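For readers on the analyst side, the two behavior-dimension properties above could be combined into a simple review heuristic. Below is a minimal Python sketch, not YouTube's actual detection logic. It assumes comments were already collected into a hypothetical `Comment` record; the emoji code-point ranges, the 2-minute window, the minimum group size of 5, and the (2 sentences, 3 trailing emojis) template are illustrative assumptions drawn from the observations above.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical record; field names are illustrative, not a platform API schema.
@dataclass
class Comment:
    author: str
    text: str
    posted_at: datetime

# Rough emoji heuristic (an assumption): common pictograph blocks plus
# misc symbols/dingbats. It will miss some emoji, e.g. flags and keycaps.
EMOJI_RANGES = [(0x1F300, 0x1FAFF), (0x2600, 0x27BF)]
VARIATION_SELECTOR = "\ufe0f"

def is_emoji(ch: str) -> bool:
    cp = ord(ch)
    return any(lo <= cp <= hi for lo, hi in EMOJI_RANGES)

def structure(text: str) -> tuple[int, int]:
    """Return (sentence_count, trailing_emoji_count) for a comment."""
    stripped = text.replace(VARIATION_SELECTOR, "").rstrip()
    trailing = 0
    i = len(stripped) - 1
    # Walk backwards over the trailing run of emojis and spaces.
    while i >= 0 and (is_emoji(stripped[i]) or stripped[i].isspace()):
        if is_emoji(stripped[i]):
            trailing += 1
        i -= 1
    body = stripped[: i + 1]
    sentences = [s for s in re.split(r"[.!?]+", body) if s.strip()]
    return len(sentences), trailing

def flag_burst(comments, window=timedelta(minutes=2), min_size=5, template=(2, 3)):
    """Return the largest group of template-matching comments posted within `window`."""
    matching = sorted(
        (c for c in comments if structure(c.text) == template),
        key=lambda c: c.posted_at,
    )
    best = []
    start = 0
    # Sliding window over time-sorted comments.
    for end in range(len(matching)):
        while matching[end].posted_at - matching[start].posted_at > window:
            start += 1
        if end - start + 1 > len(best):
            best = matching[start : end + 1]
    return best if len(best) >= min_size else []
```

Requiring both signals at once is the design choice here: a comment burst or a shared template alone could easily be authentic, as noted above, so neither should flag accounts by itself.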

Moving onto account-dimension properties:

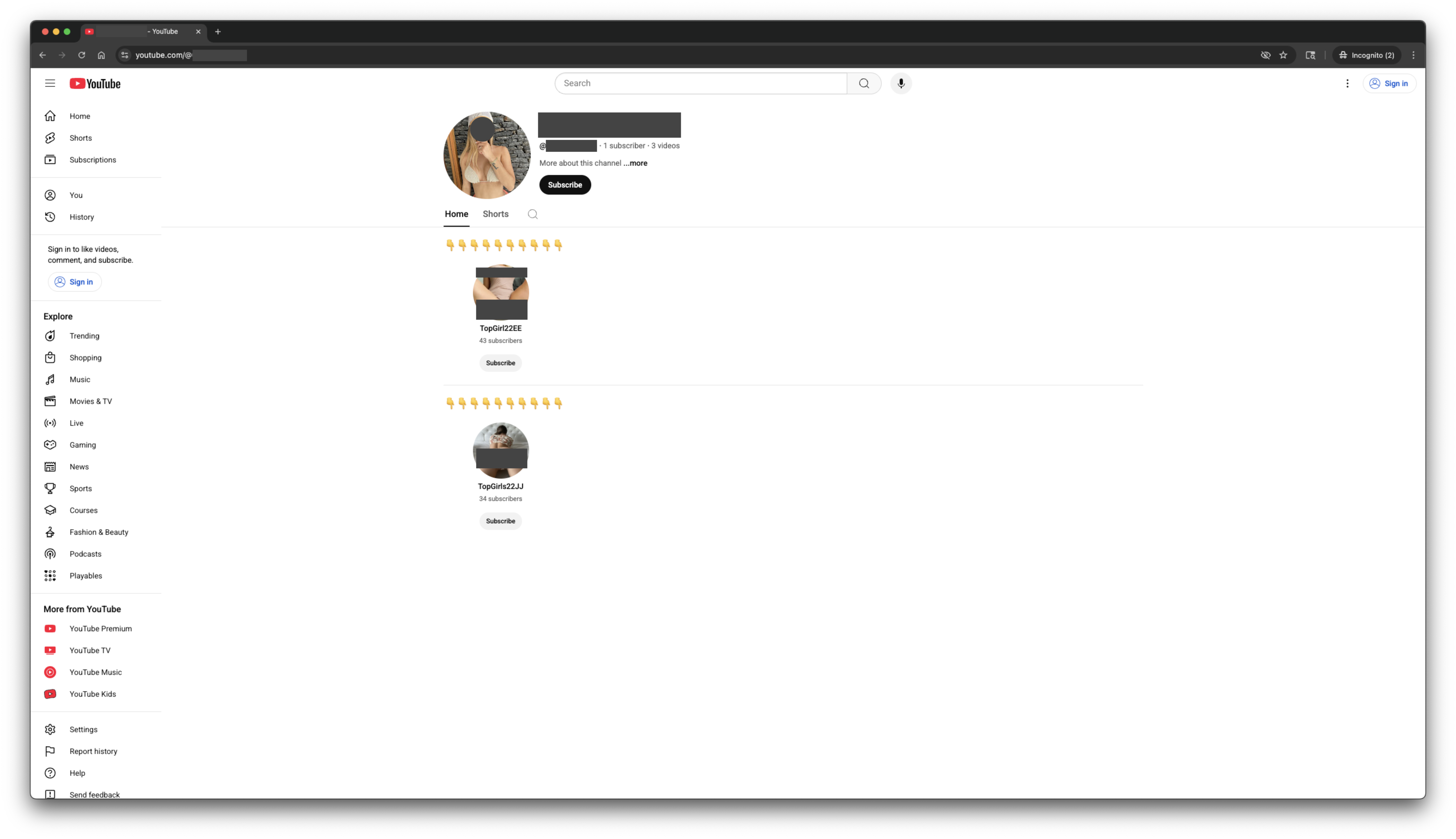

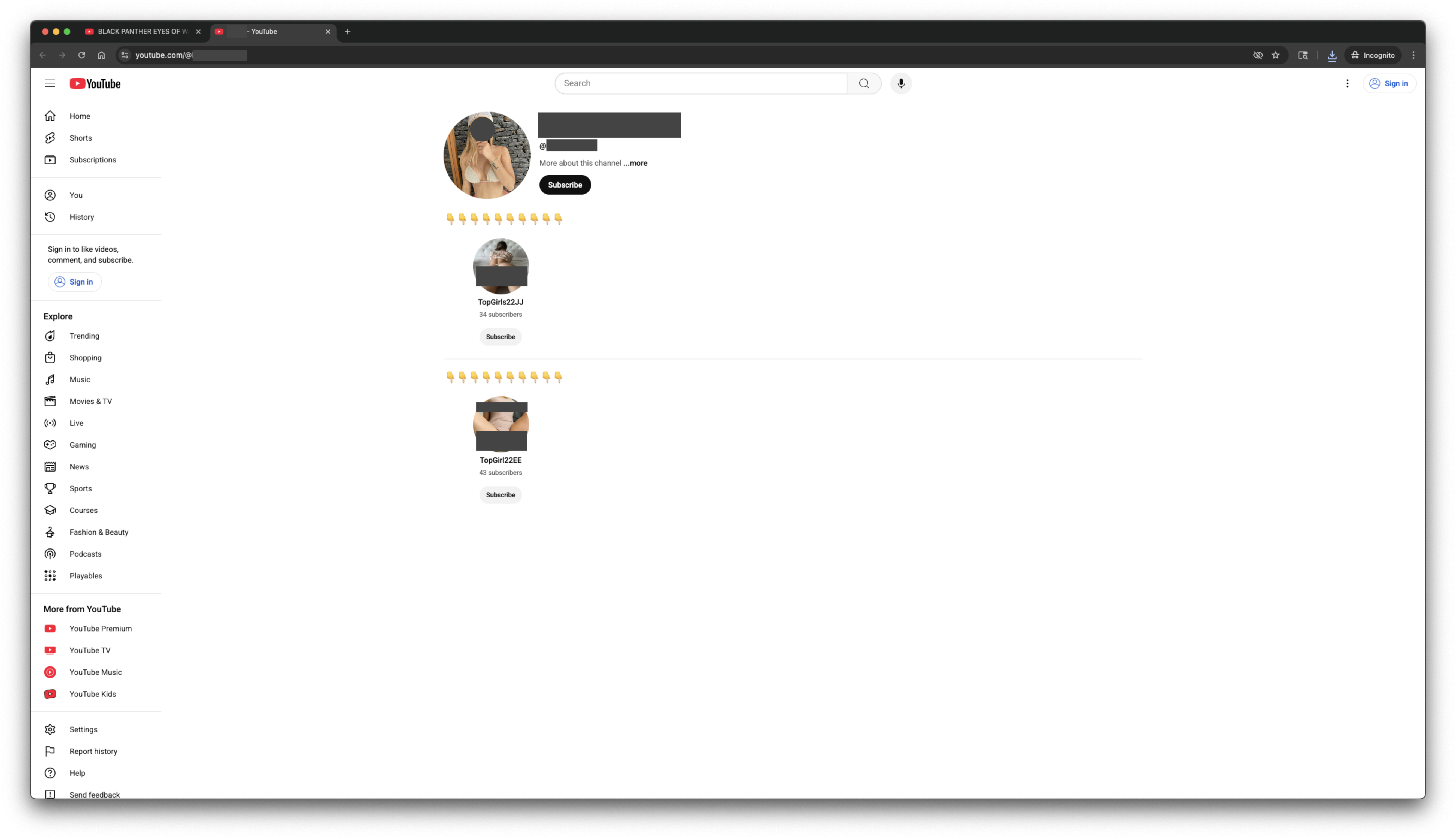

- 6 of 6 accounts had an account profile picture of an attractive, possibly eye-catching, female. In addition, 2 of 6 accounts had what appears to be the same profile picture. See Figures 2 and 3.

Figure 2

Figure 3

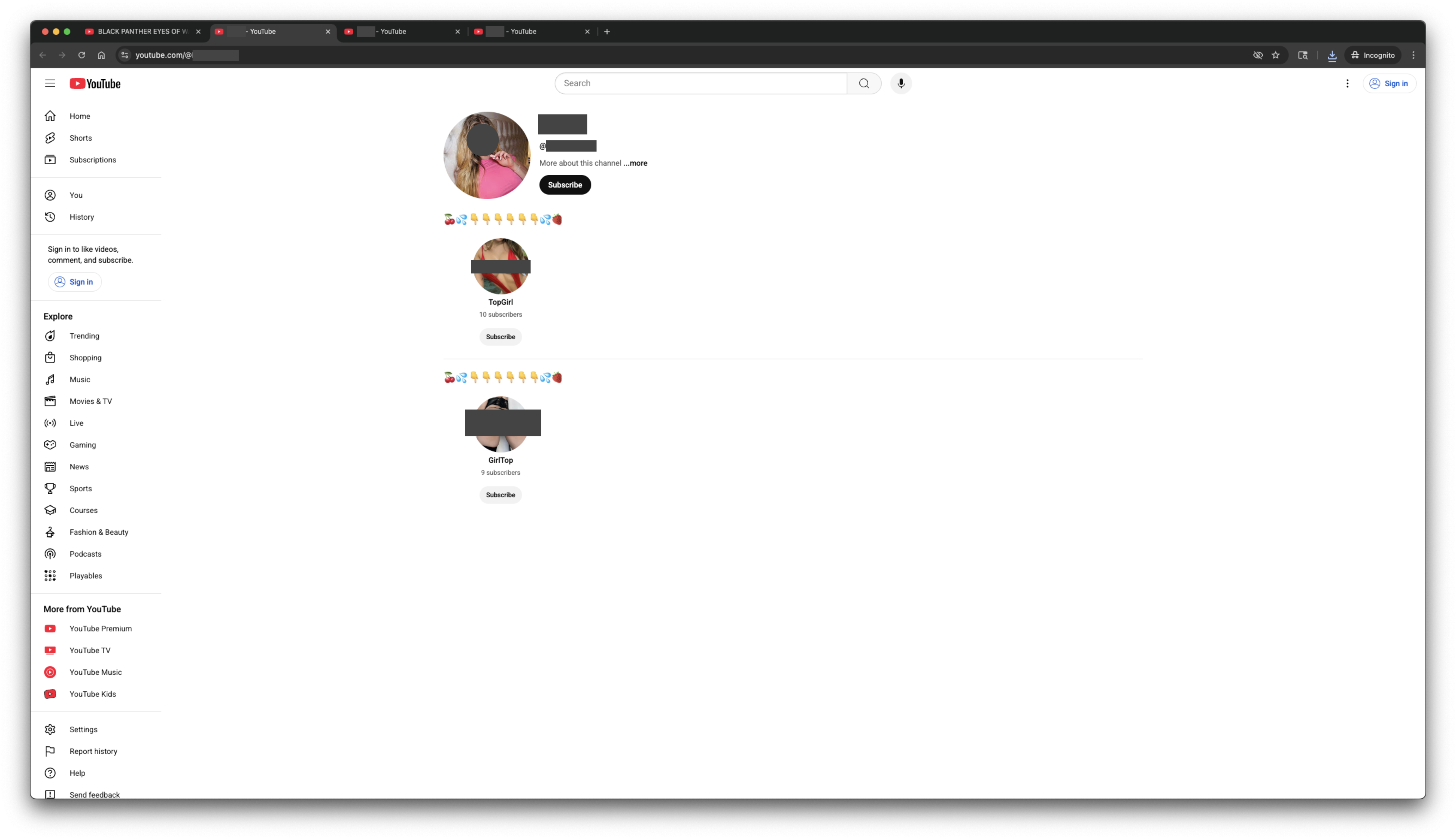

- 6 of 6 accounts had an account profile page that included eye-catching text describing links to the on-platform account pairs (A1, A2) and (A3, A4). See Figure 3 for (A1, A2) and Figure 4 for (A3, A4).² For the remainder of this blogpost, the group of 4 accounts A1 to A4 will be referred to as G2.

Figure 4

- G2 accounts all have account profile pictures of a faceless female, with an emphasis on provocativeness. The pictures arguably fall within the sexual content category, though subject matter expertise would be needed to judge how clearly they do.

- G2 accounts all have account profile names that arguably fall within the sexual content category, though subject matter expertise would be needed to judge how clearly they do.

- G2 accounts all have outbound links to off-platform digital products/services hosted on the "beacons.ai" domain. While the domain itself appears legitimate, the specific links may be malicious, given how little information search engines and LLMs have about them.

- All accounts reviewed above were created within 2 days of the video's publication date.
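Two of these account-dimension signals lend themselves to simple automated checks: account age relative to the video's publication date, and the domains behind profile links. The following is a minimal Python sketch, assuming a hypothetical `Account` record with a creation date and a free-text profile description. The 2-day threshold comes from the observation above, and `beacons.ai` appears only as a watch-list entry that routes accounts to human review, not as a judgment that the domain itself is malicious.

```python
import re
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse

# Hypothetical record; field names are illustrative, not a platform API schema.
@dataclass
class Account:
    handle: str
    created_on: date
    description: str

# Loose URL matcher for free-text descriptions (an assumption; real profiles
# may hide links behind shorteners or unusual formatting).
URL_PATTERN = re.compile(r"https?://[^\s)]+")

def outbound_domains(description: str) -> set[str]:
    """Return the hostnames of all http(s) links in a profile description."""
    hosts = set()
    for url in URL_PATTERN.findall(description):
        host = urlparse(url).hostname  # urllib returns this lowercased
        if host:
            hosts.add(host)
    return hosts

def review_flags(account, video_published, watched_domains=("beacons.ai",), max_days=2):
    """Return review signals for an account; flags suggest human review, not enforcement."""
    flags = []
    # Account created within `max_days` of the video's publication date (either side).
    if abs((account.created_on - video_published).days) <= max_days:
        flags.append("created_near_publication")
    # Any profile link whose host is a watched domain or one of its subdomains.
    hosts = outbound_domains(account.description)
    if any(h == d or h.endswith("." + d) for h in hosts for d in watched_domains):
        flags.append("links_to_watched_domain")
    return flags
```

Both checks are cheap, which is their appeal; the tradeoff is that each is weak on its own, since new accounts and link-in-bio services are both common among legitimate users.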

Summary of the seemingly problematic activities/behaviors

Based on an exploration of publicly available information, there is reason to believe that a group of coordinated, inauthentic accounts acted to increase traffic to off-platform products/services through outbound links. Furthermore, given how little information about the specific links is available in search engine indexes and LLMs, there is additional reason to believe that the linked pages, rather than the domain itself, contain malicious content.

Possibly aiming to increase traffic to a group of accounts' profile pages, G1 accounts:

- Posted positive sentiment comments to initiate engagement with the content producer,

- Posted comments soon after the video's publication,

- Posted comments quickly in succession,

- Used eye-catching, potentially attractive profile pictures of females,

- Used eye-catching, sexually suggestive text,

- Added descriptions that included links to on-platform accounts in G2.

Possibly aiming to increase traffic to a group of off-platform products/services, G2 accounts:

- Used eye-catching, provocative profile pictures of faceless females,

- Used eye-catching, sexually suggestive profile names,

- Added descriptions that included outbound links to off-platform digital products/services.

What are some thoughts after the walkthrough above?

Community guidelines thoughts

Based on my past work experience in the trust & safety functional space, the examples may violate two policy sets in YouTube's Community Guidelines: "Spam & deceptive practices" and "Sensitive content".

For the first policy set, the "Repetitive comments" guideline in the "Spam, deceptive practices, & scams policies" might be violated due to the similarity in comment posts by G1 accounts. In addition, at least one of the guidelines in the "External links policy" might be violated due to the off-platform links in the G2 account profile descriptions.

For the second policy set, the G2 account profile pictures might violate at least one of the guidelines in the "Nudity & Sexual Content Policy" as well as at least one of the guidelines in the "Thumbnails policy".

Because a comprehensive judgment requires community guidelines subject matter expertise, the G1 and G2 accounts were reported.

Detection workflow thoughts

Assuming that these observed activities and behaviors violated at least one community guideline, I thought further about the design and implementation of a detection workflow:

- What is the chosen approach for detecting similarity between comment posts, account profile pictures, account profile descriptions and account usernames?

- Furthermore, for each approach, what are the alternatives, and what tradeoffs should be considered?

- What is the scale of these problematic activities/behaviors?

- Furthermore, are there comparable problematic activities/behaviors on other video platform providers?

- What are the impacts of these unresolved issues on content consumers, content producers, advertisers and video platform providers?
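As one possible answer to the first question for comment posts, here is a minimal Python sketch of a common baseline: character-trigram shingles compared with Jaccard similarity. The shingle size and the 0.5 threshold are illustrative assumptions, and this is not a description of any platform's chosen approach. Pairwise comparison grows quadratically with the number of comments, so at platform scale an approximate technique such as MinHash with locality-sensitive hashing would be a typical alternative. Exact text similarity can also miss comments that share a structure (for example, 2 sentences followed by 3 emojis) but differ in wording, which is one of the tradeoffs worth weighing.

```python
from itertools import combinations

def shingles(text: str, n: int = 3) -> set[str]:
    """Character n-grams of the lowercased, whitespace-normalized text."""
    norm = " ".join(text.lower().split())
    if len(norm) <= n:
        return {norm}
    return {norm[i : i + n] for i in range(len(norm) - n + 1)}

def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def similar_pairs(texts: dict[str, str], threshold: float = 0.5) -> list[tuple[str, str, float]]:
    """Return (key_a, key_b, score) for every pair of texts at or above `threshold`."""
    sh = {key: shingles(t) for key, t in texts.items()}
    pairs = []
    for a, b in combinations(sorted(sh), 2):
        score = jaccard(sh[a], sh[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs
```

The same shingle-and-compare pattern could, under the same caveats, be applied to account profile descriptions and usernames; profile pictures would need a different technique, such as perceptual hashing.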

What actions can be taken?

For reference, here is YouTube's "Reporting and enforcement" Help Center webpage.

- For analysts, consider thinking about how these activities/behaviors may take form on the platforms that you are responsible for.

- For video creators and other content producers, consider using the platform's readily available tools to moderate your community, and consider asking the platform for enhancements and/or new community moderation products/services.

- For users and other content consumers, consider filing a report using the platform's readily available tools to help signal that the problematic activities/behaviors should be removed and/or reduced.